Social validation of collective annotations: Definition and experiment

Citation: Guillaume Cabanac, Max Chevalier, Claude Chrisment, Christine Julien (2010) Social validation of collective annotations: Definition and experiment. Journal of the American Society for Information Science and Technology

DOI (original publisher): 10.1002/asi.21255

Semantic Scholar (metadata): 10.1002/asi.21255

Sci-Hub (fulltext): 10.1002/asi.21255

Internet Archive Scholar (search for fulltext): Social validation of collective annotations: Definition and experiment

Download: ftp://ftp.irit.fr/IRIT/SIG/2010 JASIST CCCJ.pdf

Tagged: Computer Science

annotations, social media, information science

Summary

This paper concerns the 'social validation' of annotations: determining the 'validity' of an annotation by measuring the amount of consensus or controversy in the discussion around it. The main experiment compared human-perceived and algorithmically computed social validation and found that the two largely agree, with the algorithms "approximat[ing] human perception in up to 84% of the cases."
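The 84% figure is an agreement rate between computed and human judgments. As a rough illustration of how such a rate can be computed, the sketch below compares one computed label per item with the majority of the human labels for that item. The three-valued label set, the majority-vote rule, and the function names `majority_label` and `agreement_rate` are assumptions for illustration, not the paper's exact comparison protocol.

```python
from collections import Counter

# Hypothetical three-valued labels ("refute", "neutral", "confirm");
# the paper's own rating interface used a quartile-based scale.


def majority_label(votes: list[str]) -> str:
    """Most frequent human label for one item (ties go to the label seen first)."""
    return Counter(votes).most_common(1)[0][0]


def agreement_rate(human_votes: list[list[str]], computed: list[str]) -> float:
    """Fraction of items whose computed label matches the human majority label."""
    if not computed or len(human_votes) != len(computed):
        raise ValueError("need one computed label per item, and at least one item")
    matches = sum(
        majority_label(votes) == label for votes, label in zip(human_votes, computed)
    )
    return matches / len(computed)


humans = [
    ["confirm", "confirm", "neutral"],
    ["refute", "refute"],
    ["neutral", "confirm", "neutral"],
    ["confirm"],
]
algorithm = ["confirm", "refute", "confirm", "confirm"]
print(agreement_rate(humans, algorithm))  # 0.75
```

Majority voting is only one way to collapse several human ratings into a reference label; averaging ratings on the quartile scale would be an equally plausible choice.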

Human-perceived social validation was measured in an online experiment. The material consisted of 13 argumentative debates; this corpus, developed by the researchers, is available at http://www.irit.fr/~Guillaume.Cabanac/expe/corpus/ . Of the 181 people who registered for the experiment, the authors count as participants the 121 people from 13 countries who evaluated at least one debate. The authors discuss two major drawbacks: most participants were non-native speakers of English (realistic for the global Web, but an added layer of complexity), and the rating interface used quartiles, which caused problems because 75% agreement might not intuitively correspond to "confirmation".

The authors describe three approaches for determining the social validity of an annotation and evaluate the latter two:

- the κ coefficient (Fleiss et al., 2003): a statistical measure of inter-rater agreement, judged unsuitable because it does not take disputes into account.

- an empirical recursive scoring algorithm (Cabanac et al., 2005): rates each comment according to whether it expresses a positive or negative opinion and whether it provides supporting detail (e.g., whether references are given), and according to how far the other comments agree with it.

- a system based on the Bipolar Argumentation Framework proposed by Cayrol and Lagasquie-Schiex (2005a,b), which models attacking and supporting arguments; this system was presented in Cabanac et al. (2007a).

However, some information the algorithms can use (e.g., annotators' expertise and the number of references) was not available in the corpus, which may have caused the algorithms to be under-rated.
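To make the idea of recursive scoring concrete, here is a minimal sketch of a recursive score over a reply tree. It is not the formula of Cabanac et al. (2005): the credibility mapping, the hypothetical `REFERENCE_BONUS` of 1.5 for replies that cite references, and the names `ThreadNode` and `validity` are illustrative assumptions. What it shares with the description above is the recursion: a reply that is itself refuted counts for less when its parent is scored.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class ThreadNode:
    """An annotation or reply in a discussion thread (hypothetical model)."""
    opinion: int = 0              # +1 confirms the parent, -1 refutes it, 0 neutral
    has_references: bool = False  # does the reply cite supporting references?
    replies: list[ThreadNode] = field(default_factory=list)


REFERENCE_BONUS = 1.5  # hypothetical extra weight for replies that cite sources


def validity(node: ThreadNode) -> float:
    """Score in [-1, 1]: > 0 if the replies confirm `node`, < 0 if they refute it."""
    if not node.replies:
        return 0.0  # no discussion yet: neither confirmed nor refuted
    total = 0.0
    weight_sum = 0.0
    for reply in node.replies:
        # Recursion: a reply that is itself refuted is less credible.
        credibility = (1.0 + validity(reply)) / 2.0  # maps [-1, 1] onto [0, 1]
        weight = credibility * (REFERENCE_BONUS if reply.has_references else 1.0)
        total += weight * reply.opinion
        weight_sum += weight
    return total / weight_sum if weight_sum > 0 else 0.0


# Usage: A confirms the root (with references); B refutes it, but C refutes B.
c = ThreadNode(opinion=-1)
b = ThreadNode(opinion=-1, replies=[c])
a = ThreadNode(opinion=+1, has_references=True)
root = ThreadNode(replies=[a, b])
print(validity(root))  # 1.0
```

Here the only refutation of the root is itself fully refuted, so it carries zero weight and the root ends up fully confirmed. The `has_references` flag also shows why missing reference counts in the corpus could affect such a score.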

Each annotation is modeled as a pair <Objective Data, Subjective Information>. "Objective Data" is what the system knows or records, such as the timestamp, the location within the document, user information, and an identifier. "Subjective Information" captures the optional information supplied by the annotator:

- contents

- visibility

- expertise

- references

- annotation types: "Annotation types reflect the semantics of the contents (modification, example, and question) as well as the semantics of the author’s opinion (refute, neutral, or confirm)."
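The <Objective Data, Subjective Information> pair maps naturally onto a pair of record types. The following Python sketch is one possible encoding; the field names, the string representation of the anchor and of visibility, and treating content types as a set while the opinion is a single value are all assumptions, not the paper's definitions.

```python
from dataclasses import dataclass, field
from datetime import datetime
from enum import Enum
from typing import Optional


class Opinion(Enum):
    """Semantics of the author's opinion."""
    REFUTE = -1
    NEUTRAL = 0
    CONFIRM = 1


class ContentType(Enum):
    """Semantics of the contents."""
    MODIFICATION = "modification"
    EXAMPLE = "example"
    QUESTION = "question"


@dataclass(frozen=True)
class ObjectiveData:
    """Recorded by the system, not by the annotator."""
    identifier: str
    user: str
    timestamp: datetime
    anchor: str  # location within the document (string form is an assumption)


@dataclass
class SubjectiveInformation:
    """Optional information supplied by the annotator."""
    contents: Optional[str] = None
    visibility: Optional[str] = None  # e.g. "public" or "private" (assumed values)
    expertise: Optional[str] = None
    references: list[str] = field(default_factory=list)
    content_types: set[ContentType] = field(default_factory=set)
    opinion: Optional[Opinion] = None


@dataclass
class Annotation:
    objective: ObjectiveData
    subjective: SubjectiveInformation


note = Annotation(
    ObjectiveData("a1", "alice", datetime(2010, 1, 1, 12, 0), "para-3"),
    SubjectiveInformation(
        contents="This claim needs a source.",
        content_types={ContentType.QUESTION},
        opinion=Opinion.REFUTE,
    ),
)
```

Keeping `ObjectiveData` frozen reflects that the system, not the annotator, controls it, while every subjective field defaults to empty because the paper describes it as optional.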

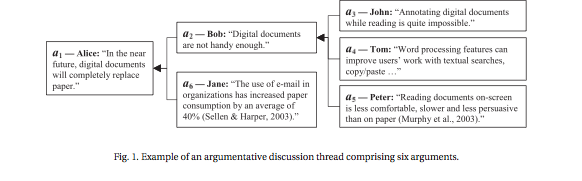

The paper also gives an example of an argumentative discussion.

Theoretical and Practical Relevance

According to the study, evaluating a debate took participants 5-6 minutes on average, which indicates the effort the task requires.

The paper contains a useful discussion of the differences between running a study online and in the lab; some of the data on this point might be reusable by other scientists.

The model of annotations could be reused.