Repeatability in computer systems research

Citation: Christian Collberg, Todd A. Proebsting (2016/02/25) Repeatability in computer systems research. Communications of the ACM

DOI (original publisher): 10.1145/2812803

Semantic Scholar (metadata): 10.1145/2812803

Sci-Hub (fulltext): 10.1145/2812803

Internet Archive Scholar (search for fulltext): Repeatability in computer systems research

Download: https://doi.org/10.1145/2812803

Tagged: Computer Science, reproducibility, open academia

Summary

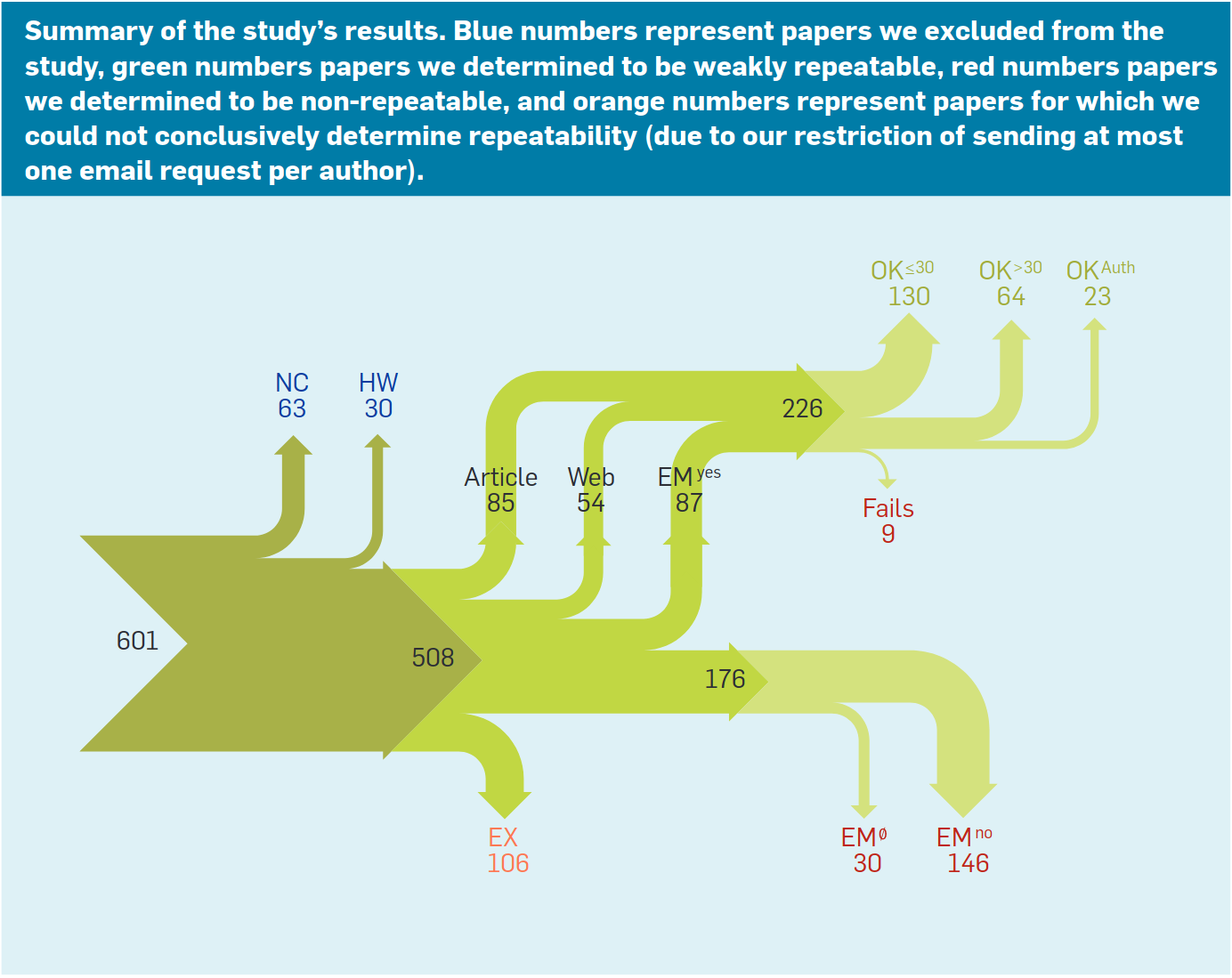

In this study, only 32% of publications from top conferences and journals (excluding some for technical reasons) were repeatable with minimal effort. Only 27% had source code publicly available. The authors argue that research funding institutions should fund repeatability engineering, and that journal editors should require authors to state a "sharing contract" defining how they will share their artifacts, which would then be taken into account during peer review. Notably, this does not require the authors to share their code, only to specify at review time whether their code will be shared.

Background

- The authors use different definitions of "reproducibility" than those used by Rougier and Hinsen.

- Repeatability: One can run identical code and get identical results as those published.

- Reproducibility: One can derive similar results as those published (either by writing new software according to the description or using the authors').

- Previous studies had asked "Is the code or data available?", but this study also asks "Will the code run out of the box?", which is necessary for reproducibility.

- Artifact evaluation is promising, but does not impact whether the manuscript is published.

Methodology

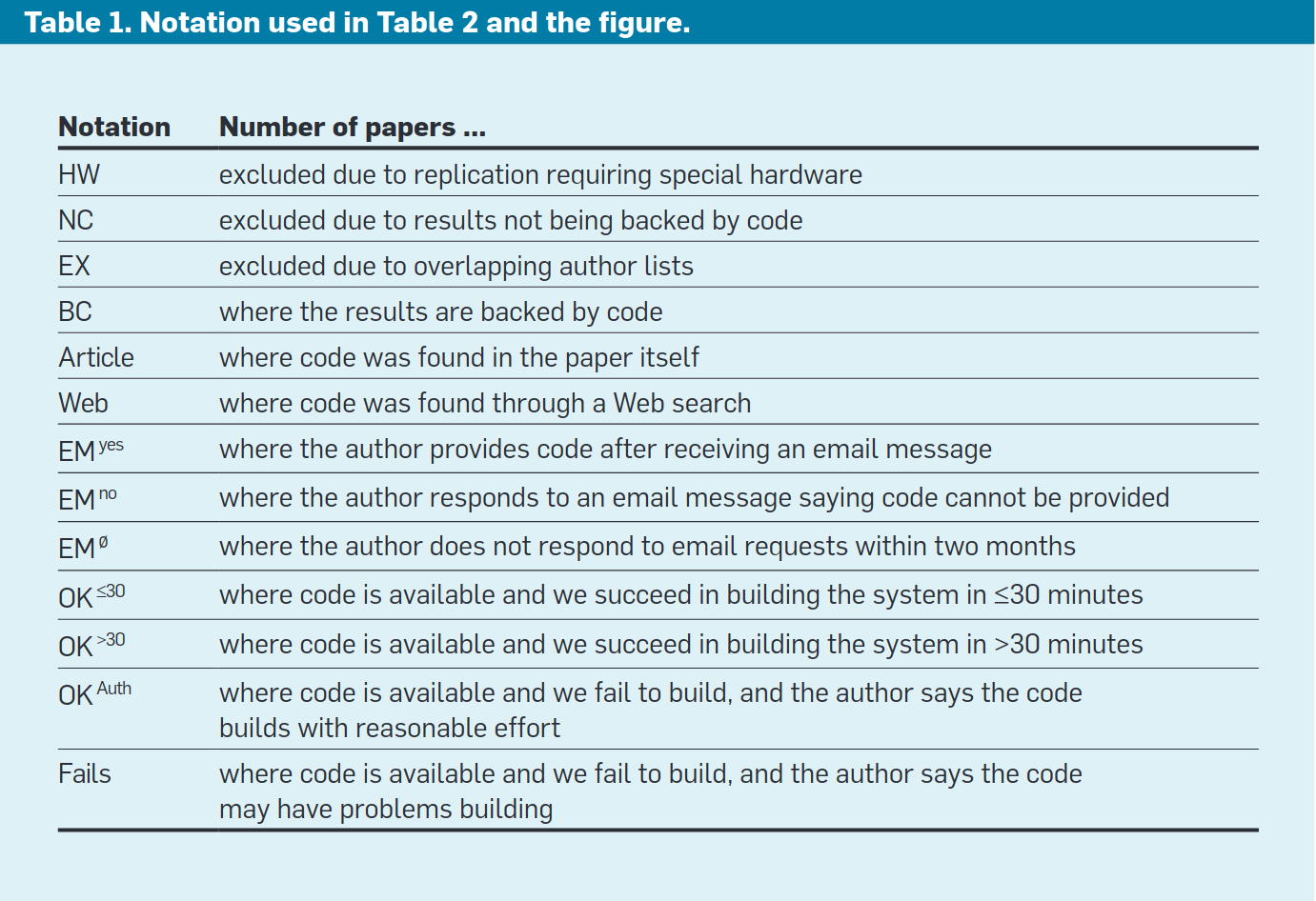

- 601 papers from 8 ACM conferences (ASPLOS'12, CCS'12, OOPSLA'12, OSDI'12, PLDI'12, SIGMOD'12, SOSP'11, VLDB'12) and 5 journals (TACO'12, TISSEC'12/13, TOCS'12, TODS'12, TOPLAS'12).

- Removed those which required special hardware.

- Looked for source code in the paper, on the internet, or through email correspondence with the authors.

- Gave a research assistant (RA) 30 minutes to build the code.
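The time-boxed build step above can be approximated in an automated setting. The following is a minimal sketch, not the authors' procedure: the study limited a human's effort to 30 minutes, whereas this sketch only puts a wall-clock limit on a single build command. The function name `try_build` and the outcome labels are hypothetical.

```python
import subprocess
import sys

# Assumption: the 30-minute budget is applied as a wall-clock timeout.
BUILD_BUDGET_SECONDS = 30 * 60


def try_build(command, workdir=".", budget=BUILD_BUDGET_SECONDS):
    """Run one build command and classify the outcome.

    Returns "built", "failed", or "timed_out" (hypothetical labels).
    """
    try:
        result = subprocess.run(
            command,
            cwd=workdir,
            stdout=subprocess.DEVNULL,
            stderr=subprocess.DEVNULL,
            timeout=budget,
        )
    except subprocess.TimeoutExpired:
        # The command was still running when the budget ran out.
        return "timed_out"
    except OSError:
        # The build tool itself could not be started (e.g. not installed).
        return "failed"
    return "built" if result.returncode == 0 else "failed"


if __name__ == "__main__":
    # Stand-in for a real build command such as ["make"].
    print(try_build([sys.executable, "-c", "pass"]))
```

A real replication attempt would also record the build log and the environment used, since those details are what a later tester needs to explain a failure.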

Results

- Common responses when code was not available:

- Could not find exact version from paper.

- Not mature enough to release publicly.

- Editor's note: These respondents appear to hold releasing code to a more stringent bar than publishing the results produced by that code.

- "The code was never intended to be released so is not in any shape for general use."

- The one student who knew where the code was has left.

- 32% were repeatable by the team in less than 30 minutes, 48% (including the 32%) were repeatable by the team, and 54% (including the 48%) were repeatable by the team or the authors.
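Because the three percentages above are nested, each one including the previous, it can help to split them into non-overlapping shares. The sketch below only performs that subtraction on the figures quoted in this summary; the labels and the function name `incremental_shares` are illustrative, and the final remainder is derived here rather than stated in the summary.

```python
# Cumulative percentages as quoted in the Results list above.
CUMULATIVE = [
    ("repeatable by team in under 30 minutes", 32),
    ("repeatable by team", 48),
    ("repeatable by team or authors", 54),
]


def incremental_shares(cumulative):
    """Convert nested (cumulative) percentages into disjoint shares."""
    shares = []
    previous = 0
    for label, percent in cumulative:
        shares.append((label, percent - previous))
        previous = percent
    return shares


shares = incremental_shares(CUMULATIVE)
# Derived remainder: papers not covered by any of the three categories.
not_repeatable = 100 - CUMULATIVE[-1][1]

if __name__ == "__main__":
    for label, percent in shares:
        print(f"{percent:>3}%  newly counted as: {label}")
    print(f"{not_repeatable:>3}%  not repeatable by team or authors")
```

Read this way, 32% built within the time limit, a further 16% built only with more effort from the team, a further 6% were repeatable only when the authors are included, and the remaining 46% fall into none of these categories.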

Analysis

- Public funding has the explicit mission of creating reproducible research, but empirically it had no effect on reproducibility.

- Industry involvement had a negative effect on reproducibility, probably because the industry partners have intellectual property restrictions.

- Published code is not necessarily the same version that was used in the published article, although in many cases it is.

Recommendations

- Reviewers should "take the expected level of repeatability into consideration in their recommendation to accept or reject".

- Funding agencies should fund requests for "repeatability engineering". This would increase research output indirectly by making it easier to build on the funded work.

- Many funding agencies already require funded work to be reproducible, but this is not enforced.

- Journals should require authors to state the conditions under which their work is shared (a "sharing contract", which is different from a license). It specifies, for example, whether the authors will provide support, where they will host data, and for how long.

- "The real solution to the problem of researchers not sharing their research artifacts lies in finding new reward structures that encourage them to produce solid artifacts, share these artifacts, and validate the conclusions drawn from the artifacts published by their peers. Unfortunately, in this regard we remain pessimistic, not seeing a near future where such fundamental changes are enacted."
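To make the "sharing contract" recommendation above more concrete, here is a minimal sketch of how such a contract could be recorded as structured data. The class name, field names, and summary wording are all hypothetical; the summary only says that a contract covers questions such as support, hosting location, and duration, and that declining to share is itself a valid declaration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class SharingContract:
    """Hypothetical record of what authors commit to at review time."""

    code_shared: bool                # sharing is declared, not required
    provides_support: bool           # will the authors answer questions?
    hosting_location: Optional[str]  # where the artifact will be hosted
    available_years: Optional[int]   # how long it will stay available

    def summary(self) -> str:
        if not self.code_shared:
            return "Code will not be shared."
        support = "with" if self.provides_support else "without"
        where = self.hosting_location or "an unspecified location"
        if self.available_years is not None:
            duration = f"for {self.available_years} years"
        else:
            duration = "for an unspecified period"
        return f"Code shared at {where} {duration}, {support} author support."


if __name__ == "__main__":
    contract = SharingContract(
        code_shared=True,
        provides_support=False,
        hosting_location="https://example.org/artifact",  # placeholder URL
        available_years=5,
    )
    print(contract.summary())
```

A reviewer could then weigh the declared terms alongside the paper, which matches the recommendation that expected repeatability be considered in the accept or reject decision.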

Follow-ups

- After publication, some authors disputed the finding that their artifacts did not build. Another team attempted to rectify these issues in follow-up work. Many artifacts reported as not building were found to build, generally with more effort and skill on the part of the tester.

- The original team has since released a technical report and raw data.